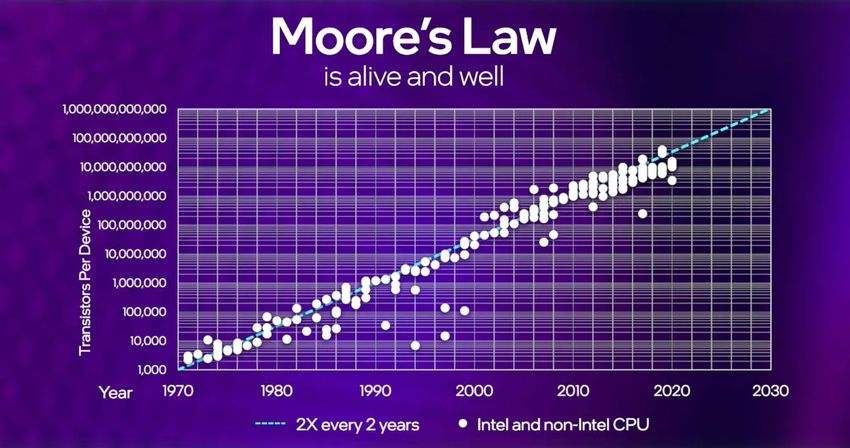

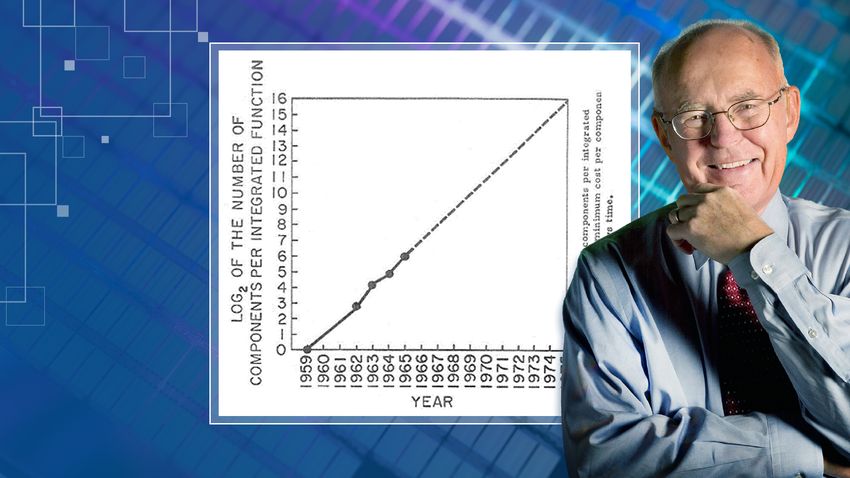

The evolution of artificial intelligence (AI) is closely intertwined with advances in computing hardware. Among the key principles driving this progress is Moore’s Law, named after Gordon Moore, who later co-founded Intel. In 1965 he observed that the number of transistors on a microchip was doubling roughly every year, and in 1975 he revised the pace to approximately every two years. The result was exponential growth in computing power at a falling cost per transistor. Moore’s Law is an empirical observation rather than a physical law, yet it set the pace of hardware improvement on which much of AI’s growth has depended.

What is Moore’s Law?

Moore’s Law is the observation that the number of transistors on a microchip doubles approximately every two years, while the cost per transistor falls. Gordon Moore first described the trend in 1965, initially as a doubling every year, and settled on the two-year figure in 1975. It has served as a planning target for the semiconductor industry for decades. The doubling of transistors has led to exponential improvements in processing power, enabling advances in a wide range of fields, including artificial intelligence. Although the pace of transistor scaling has slowed in recent years due to physical limitations, Moore’s Law continues to shape expectations of technological progress.
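The compounding behind a fixed doubling period is easy to underestimate, so a small sketch may help. The function names below are illustrative, and the starting figure of 2,300 transistors (roughly the count of an early-1970s microprocessor) is used only as an example input, not as a claim about any particular product roadmap.

```python
def growth_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Multiplicative growth after `years`, given one doubling per period."""
    return 2.0 ** (years / doubling_period_years)


def projected_count(initial_count: float, years: float,
                    doubling_period_years: float = 2.0) -> float:
    """Idealized transistor count after `years` of uninterrupted doubling."""
    return initial_count * growth_factor(years, doubling_period_years)


if __name__ == "__main__":
    # Illustrative starting point: about 2,300 transistors.
    for elapsed in (10, 20, 30, 40, 50):
        count = projected_count(2_300, elapsed)
        print(f"after {elapsed:2d} years: ~{count:,.0f} transistors")
```

Under these idealized assumptions, ten years means five doublings (a factor of 32), and fifty years means twenty-five doublings, which takes the count from thousands into the tens of billions. Real chips have not followed the curve exactly, but the order of magnitude shows why the trend mattered so much.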

Moore’s Law: A Catalyst for AI’s Growth

1. Enabling Early AI Algorithms

In the 1950s and 1960s, AI research was in its infancy. Algorithms like the perceptron, one of the earliest neural networks, ran on hardware that was scarce and expensive. The rapid increase in computing power described by Moore’s Law made it feasible to run more complex algorithms, contributing to the AI boom of the 1980s, when expert systems reached industry and backpropagation revived interest in neural networks.

2. Democratization of Computing Power

As transistors became smaller and cheaper, personal computers and servers became more accessible. This democratization allowed universities, startups, and individual researchers to experiment with AI, accelerating innovation and diversifying research areas.

3. The Rise of Deep Learning

Deep learning, the backbone of modern AI, relies heavily on massive computational resources. The exponential growth in GPU performance, driven by transistor scaling together with highly parallel chip designs, enabled researchers to train deep neural networks on large datasets. For example, AlexNet, the 2012 breakthrough model in computer vision, was trained on two consumer GPUs, hardware that decades of transistor scaling had made powerful and affordable.

Impacts on Specific AI Milestones

1. Machine Learning and Big Data

As computing power increased, so did our ability to process and analyze big data. This symbiotic relationship between Moore’s Law and data availability allowed machine learning algorithms to flourish. Faster processors enabled real-time data processing, unlocking applications in fields like natural language processing (NLP), fraud detection, and personalized recommendations.

2. Advancements in Natural Language Processing

Models like GPT (Generative Pre-trained Transformer) are computationally intensive, with billions of parameters trained on massive datasets. The growth in computational power predicted by Moore’s Law has been instrumental in making such models practical. For instance, GPT-3, developed by OpenAI, has 175 billion parameters and required several thousand petaflop/s-days of compute to train (about 3,640 by OpenAI’s own estimate), which would have been inconceivable without decades of hardware advancements.
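To make the unit concrete: one petaflop/s-day is 10^15 floating-point operations per second sustained for a full day. The sketch below estimates training compute with a widely used rule of thumb, roughly 6 operations per parameter per training token. That rule, the function names, and the input figures (175 billion parameters and about 300 billion training tokens, as publicly reported for GPT-3) are assumptions brought in for illustration and are not derived in this article.

```python
SECONDS_PER_DAY = 86_400
# One petaflop/s-day: 1e15 operations per second, sustained for one day.
FLOPS_PER_PETAFLOP_S_DAY = 1e15 * SECONDS_PER_DAY


def training_flops(num_parameters: float, num_tokens: float) -> float:
    """Rule-of-thumb estimate: about 6 FLOPs per parameter per token."""
    return 6.0 * num_parameters * num_tokens


def to_petaflop_s_days(flops: float) -> float:
    """Convert a raw operation count into petaflop/s-days."""
    return flops / FLOPS_PER_PETAFLOP_S_DAY


if __name__ == "__main__":
    # Assumed inputs: publicly reported GPT-3 figures, used for illustration.
    flops = training_flops(num_parameters=175e9, num_tokens=300e9)
    print(f"estimated training compute: {flops:.2e} FLOPs")
    print(f"equivalent: ~{to_petaflop_s_days(flops):,.0f} petaflop/s-days")
```

With these inputs the estimate comes to about 3.15 × 10^23 operations, or roughly 3,600 petaflop/s-days. Put differently, a machine sustaining one petaflop per second would need close to ten years to complete the run.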

3. Autonomous Systems

From self-driving cars to robotics, autonomous systems demand real-time decision-making and processing of sensor data. Moore’s Law facilitated the miniaturization and performance improvements in embedded systems, enabling AI-powered devices to operate efficiently in real-world environments.

Challenges and the Future Beyond Moore’s Law

While Moore’s Law has driven AI’s progress for decades, physical limitations in transistor miniaturization are becoming apparent. This slowdown has prompted the industry to explore alternative pathways:

1. Specialized Hardware

In response to the slowdown of Moore’s Law, companies are turning to specialized hardware for AI workloads. Examples include GPUs, which were originally built for graphics but suit the parallel arithmetic of neural networks, TPUs (Tensor Processing Units) designed specifically for machine learning, and experimental neuromorphic chips. These innovations continue to push the boundaries of AI performance.

2. Quantum Computing

Quantum computing holds promise for solving certain problems that are computationally infeasible for classical computers. While still in its infancy, and with its practical benefit to AI not yet demonstrated, quantum computing could influence the trajectory of AI through advances in optimization and complex simulations, and it is already reshaping thinking about cryptography.

3. Energy Efficiency

As AI models grow larger, energy consumption becomes a critical concern. Advances in energy-efficient hardware and algorithms are crucial for sustainable AI development, ensuring continued progress without overwhelming energy resources.

Educational Takeaways

- Exponential Growth Fuels Innovation: Moore’s Law demonstrates how exponential growth in hardware capabilities drives innovation across industries, particularly in AI.

- Hardware and Software Co-Evolution: The interplay between hardware advancements and software innovation is essential. As computing power increases, AI algorithms evolve to leverage these capabilities effectively.

- Preparing for a Post-Moore Era: Understanding the challenges posed by the limits of Moore’s Law highlights the need for interdisciplinary approaches, combining AI research with hardware engineering, energy efficiency, and quantum computing.

Conclusion

The impact of Moore’s Law on AI’s evolution is profound, shaping the field from its early days to its current state. By providing the computational foundation for increasingly complex algorithms, Moore’s prediction has enabled AI to tackle problems once thought insurmountable. As we approach the limits of transistor miniaturization, the AI community must embrace new paradigms to sustain progress. Whether through specialized hardware, quantum computing, or energy-efficient designs, the legacy of Moore’s Law will continue to inspire innovation in AI for years to come.