Introduction

In recent years, two influential families of language models have come to dominate natural language processing (NLP): GPT (Generative Pre-trained Transformer) and BERT (Bidirectional Encoder Representations from Transformers). Both have changed how machines interpret and produce human language, powering applications from chatbots to search. But how do GPT and BERT work, and what makes each one distinct? In this article, we’ll explore the key differences between the two models, their capabilities, and where each fits in AI text generation and understanding.

Understanding the Basics of GPT and BERT

Before diving into the technicalities, it’s important to understand what GPT and BERT are and why they’ve made such an impact.

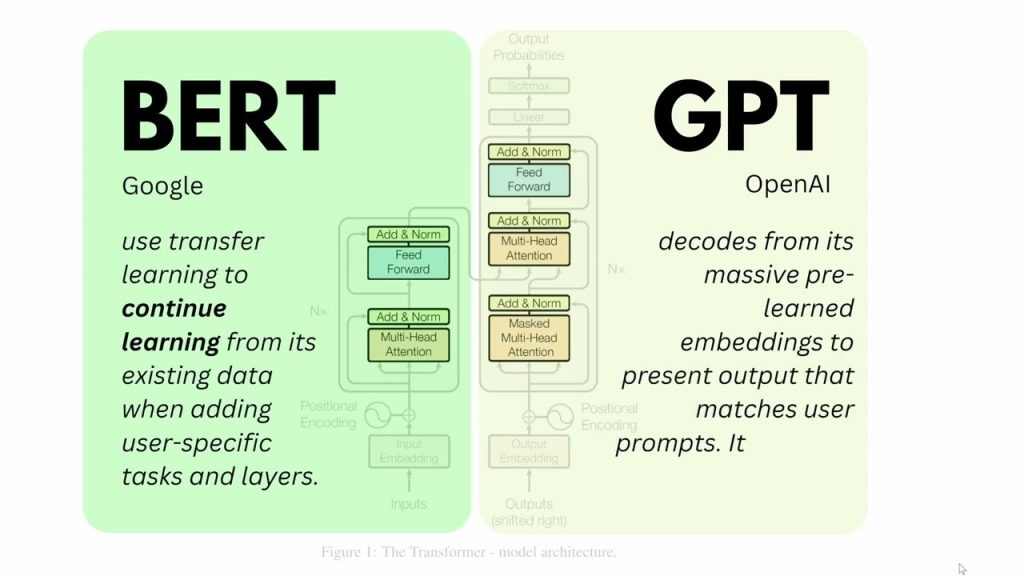

- GPT (Generative Pretrained Transformer): Developed by OpenAI, GPT is designed to generate text based on prompts. It’s a unidirectional model, meaning it reads and processes text from left to right. This sequential structure makes GPT highly effective for text generation tasks, where the model predicts and generates the next word in a sequence.

- BERT (Bidirectional Encoder Representations from Transformers): Created by Google, BERT is designed for understanding the meaning of language in context. Unlike GPT, BERT is bidirectional: when it represents a word, it looks at the words on both its left and its right at the same time. This enables BERT to grasp the full context of words in a sentence, making it highly effective for language understanding tasks like question answering and sentiment analysis.

In summary:

- GPT: Primarily used for text generation.

- BERT: Primarily used for text comprehension.

Key Technical Differences

1. Architecture and Directionality

- GPT’s Unidirectional Architecture: GPT processes text in left-to-right order, so each word is represented using only the words that come before it. This is exactly what next-word prediction needs, which makes GPT well suited to text generation. The trade-off is that the representation of a word cannot draw on anything that comes after it.

- BERT’s Bidirectional Architecture: BERT is bidirectional, allowing it to process text from both directions simultaneously. This means BERT can understand the context of a word based on the entire sentence, both preceding and following it. This bidirectional context makes BERT exceptional for tasks that require deep understanding.
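One way to picture the difference in directionality is as an attention mask: a grid saying which positions each word is allowed to look at. The following is a minimal sketch in plain Python, not the actual implementation of either model; the helper names `causal_mask`, `full_mask`, and `show` are made up for illustration.

```python
def causal_mask(n):
    """Row i may look at positions 0..i only (GPT-style, left to right)."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


def full_mask(n):
    """Every position may look at every other position (BERT-style)."""
    return [[1] * n for _ in range(n)]


def show(mask, tokens):
    """Print one row per token; 1 means 'this column is visible'."""
    for tok, row in zip(tokens, mask):
        print(f"{tok:>6} " + " ".join(str(v) for v in row))


tokens = ["the", "cat", "sat", "down"]
print("causal (GPT-style):")
show(causal_mask(len(tokens)), tokens)
print("full (BERT-style):")
show(full_mask(len(tokens)), tokens)
```

In the causal grid, the row for "cat" can see "the" and itself but not "sat" or "down"; in the full grid, every row sees the whole sentence.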

2. Training Methodology

- GPT’s Autoregressive Training: GPT uses an autoregressive training method, which means it predicts the next word in a sequence based on the words that came before. This training method is well-suited for tasks where generating new text is essential.

- BERT’s Masked Language Model (MLM): BERT uses masked language modeling during training. It hides a fraction of the tokens in the input (about 15% in the original BERT setup) and trains the model to predict the hidden tokens from the surrounding context. This forces BERT to “fill in the blanks” using both the left and the right context, which sharpens its grasp of how words relate to each other.
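To make the two training objectives concrete, here is a small sketch of how training examples might be built from one sentence under each scheme. It is a simplification under stated assumptions: the function names are hypothetical, tokens are whole words, and the masking step simply swaps a chosen token for `[MASK]`, which is simpler than the full masking recipe used to train BERT.

```python
import random


def next_token_examples(tokens):
    """Autoregressive objective: pair each prefix with the token that follows it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def masked_example(tokens, mask_prob=0.15, seed=0, mask_token="[MASK]"):
    """Masked-LM objective: hide some tokens and keep the originals as targets."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    masked, targets = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            masked.append(mask_token)
            targets[i] = tok  # the model must recover this token
        else:
            masked.append(tok)
    return masked, targets


sentence = "the cat sat on the mat".split()

for prefix, target in next_token_examples(sentence):
    print(prefix, "->", target)

# A higher mask_prob than usual, only so a short sentence is likely to show a mask.
masked, targets = masked_example(sentence, mask_prob=0.5)
print(masked, targets)
```

The autoregressive examples only ever show the model what came before the target, while the masked example leaves words on both sides of each gap visible.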

3. Applications

- GPT’s Applications in Text Generation: Because of its unidirectional and predictive nature, GPT is commonly used for tasks like text completion, storytelling, conversation generation, and other forms of creative writing. Models like GPT-3 are widely known for their ability to produce human-like text, making them popular in content creation and chatbot applications.

- BERT’s Applications in Text Understanding: BERT excels at language comprehension, making it suitable for tasks such as question answering, text classification, and sentiment analysis. BERT-based models are frequently used in search engines, virtual assistants, and other applications where accurate language understanding is essential.

GPT in Action – Text Generation Powerhouse

GPT’s popularity stems from its remarkable ability to generate coherent and contextually accurate text. Here’s a closer look at how GPT works and what makes it stand out:

How GPT Generates Text

- Prompt Input: The user provides a prompt, such as a sentence or question, which is split into tokens (words or word pieces) that the model can process.

- Sequential Prediction: GPT generates text one token at a time. Each new token is predicted from the prompt plus everything generated so far, then appended to the context before the next prediction.

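- Sampling Controls: At generation time, users can adjust decoding parameters such as “temperature” and “top-k” to trade off creativity against coherence. Lower temperatures and smaller k make the output more predictable; higher values make it more varied. These settings change how the next token is chosen, not the model’s weights, so they are distinct from fine-tuning.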
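The temperature and top-k settings mentioned above can be sketched in a few lines. This is an illustrative toy, not how any particular GPT service implements sampling: the function `sample_next` and the example scores are invented, and a real model would produce scores over a vocabulary of many thousands of tokens.

```python
import math
import random


def sample_next(logits, temperature=1.0, top_k=None, rng=random):
    """Pick one token from a {token: score} dict using temperature and top-k."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # Rank candidates by score and keep only the k best, if requested.
    items = sorted(logits.items(), key=lambda kv: kv[1], reverse=True)
    if top_k is not None:
        items = items[:top_k]
    # Temperature-scaled softmax weights (subtract the max for numerical stability).
    scaled = [score / temperature for _, score in items]
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]
    tokens = [tok for tok, _ in items]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Hypothetical scores for the word after "the cat sat on the".
logits = {"mat": 2.0, "sofa": 1.5, "roof": 0.5, "moon": -1.0}

print(sample_next(logits, temperature=0.7, top_k=2))  # only "mat" or "sofa" possible
print(sample_next(logits, temperature=1.5))           # flatter distribution, more variety
```

Dividing the scores by a temperature below 1 widens the gap between likely and unlikely tokens, while top-k removes the unlikely ones from consideration altogether; with `top_k=1` the choice is always the highest-scoring token.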

Applications of GPT

- Content Creation: Many companies use GPT for generating blog posts, product descriptions, and creative writing.

- Chatbots and Virtual Assistants: GPT models power conversational agents that can handle customer inquiries or provide information.

- Social Media Content: Influencers and brands use GPT to generate captions, tweets, and posts.

GPT’s primary strength lies in generating coherent, contextually relevant text based on limited input, making it ideal for applications where content creation is required.

BERT in Action – Master of Language Understanding

Unlike GPT, BERT focuses on understanding language at a deeper level. Its bidirectional approach enables BERT to grasp the context and relationships between words within a sentence, giving it a significant advantage in language comprehension tasks.

How BERT Understands Text

- Masked Word Prediction: During training, BERT masks random words in each sentence and learns to predict them based on surrounding words.

- Contextual Encoding: BERT builds the representation of each word from the words both before and after it, so the same word is encoded differently depending on the sentence it appears in.

- Bidirectional Context: By learning from both directions, BERT captures the subtle nuances of language, including idiomatic expressions and complex sentence structures.
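A toy example can show why context on both sides helps with filling in a blank. The sketch below is not BERT; it is a simple word-counting stand-in over a made-up four-sentence corpus, and the function `fill_mask` is hypothetical. It only compares what can be guessed from the left neighbour alone with what can be guessed from both neighbours.

```python
from collections import Counter

# A tiny invented corpus, used only for this illustration.
corpus = [
    "the dog barked loudly",
    "the cat meowed loudly",
    "the dog barked again",
    "the cat meowed softly",
]


def fill_mask(tokens, use_right_context):
    """Count corpus words that fit the [MASK] slot given its neighbours."""
    i = tokens.index("[MASK]")
    left = tokens[i - 1] if i > 0 else None
    right = tokens[i + 1] if i + 1 < len(tokens) else None
    counts = Counter()
    for sentence in corpus:
        words = sentence.split()
        for j, word in enumerate(words):
            prev = words[j - 1] if j > 0 else None
            nxt = words[j + 1] if j + 1 < len(words) else None
            if prev != left:
                continue  # left neighbour must always match
            if use_right_context and nxt != right:
                continue  # right neighbour must match only in two-sided mode
            counts[word] += 1
    return counts


query = "the [MASK] meowed softly".split()
print("left context only:", fill_mask(query, use_right_context=False))
print("both sides:       ", fill_mask(query, use_right_context=True))
```

With only the left neighbour ("the"), "dog" and "cat" are equally plausible. Once the right neighbour ("meowed") is taken into account, "cat" is the only candidate left.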

Applications of BERT

- Search and Information Retrieval: BERT is widely used in search engines to provide relevant and accurate results based on user queries.

- Sentiment Analysis: Many companies use BERT for analyzing customer sentiment in reviews, social media posts, and feedback.

- Question Answering Systems: Virtual assistants use BERT to answer user questions accurately by understanding context and intent.

BERT’s strength lies in language comprehension, making it invaluable for applications where accurate interpretation of meaning is critical.

Strengths and Limitations of GPT and BERT

Strengths

- GPT:

  - Excellent for text generation, creative writing, and chatbots.

  - Produces coherent, contextually relevant language based on minimal prompts.

- BERT:

  - Exceptional at language understanding, making it ideal for search engines, sentiment analysis, and question-answering.

  - Strong in interpreting context by examining language bidirectionally.

Limitations

- GPT:

  - Limited in understanding the full context since it only processes text in one direction.

  - Prone to generating inaccurate or nonsensical information if the prompt is ambiguous.

- BERT:

  - Not optimized for text generation; it’s better suited for language interpretation tasks.

  - Can struggle with tasks requiring extended output, like storytelling.

How GPT and BERT Are Shaping AI Text Generation

GPT and BERT have opened the doors to a wide range of applications across industries:

- Content Creation and Marketing: GPT’s ability to generate high-quality text makes it an invaluable tool for content creators, marketers, and businesses looking to scale content production.

- Search and Customer Support: BERT’s advanced language comprehension enhances search engines and customer support systems, delivering more accurate responses and improving user experience.

- Education and Training: Both models are used to create personalized educational content, answer student questions, and generate study materials.

Conclusion

GPT and BERT have redefined what’s possible in AI text generation and comprehension. With GPT’s strength in producing coherent text and BERT’s strength in interpreting language in context, these models underpin smarter, more responsive applications across industries. Each has its own strengths and limitations, and the two complement each other: one generates human language, the other understands it.

Whether used independently or together, GPT and BERT are powerful tools that continue to shape the future of AI and open new possibilities for how we interact with machines in our daily lives.