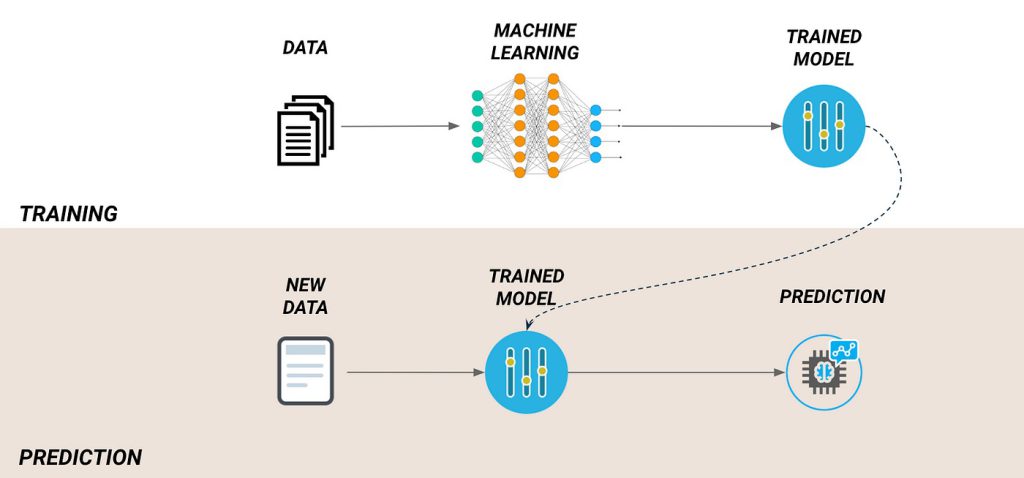

Artificial intelligence (AI) is revolutionizing industries across the globe, and many businesses and researchers are eager to develop their own AI models tailored to specific needs. However, training an AI model is a complex process fraught with challenges. Understanding these difficulties and their solutions can help streamline the process and lead to better outcomes. This article explores the most common challenges faced when training an AI model and practical solutions to overcome them.

1. Data Availability and Quality

Challenge:

One of the most significant hurdles in training an AI model is obtaining high-quality, relevant data. Many datasets contain missing values, biases, or noise, which can negatively impact model performance.

Solution:

- Use data augmentation (for example, flipping, cropping, or rotating images, or adding noise to signals) to increase dataset size and diversity.

- Apply preprocessing steps such as normalization, imputing missing values, and removing outliers.

- Use synthetic data generation techniques where real data is limited.

- Regularly audit datasets for biases and class imbalances, and rebalance skewed classes with techniques such as oversampling the minority class or undersampling the majority class.
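The preprocessing and rebalancing steps above can be sketched in plain Python. This is a minimal illustration. It assumes numeric feature columns stored as lists, with `None` marking missing values. The function names are hypothetical, and real projects would typically use maintained library equivalents.

```python
import random
import statistics
from collections import Counter


def impute_mean(column):
    """Replace missing values (None) with the mean of the observed values."""
    observed = [v for v in column if v is not None]
    mean = statistics.fmean(observed)
    return [mean if v is None else v for v in column]


def min_max_normalize(column):
    """Rescale values linearly into [0, 1]; a constant column maps to all zeros."""
    lo, hi = min(column), max(column)
    if hi == lo:
        return [0.0] * len(column)
    return [(v - lo) / (hi - lo) for v in column]


def remove_outliers_iqr(column, k=1.5):
    """Keep only values inside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = statistics.quantiles(column, n=4)
    iqr = q3 - q1
    return [v for v in column if q1 - k * iqr <= v <= q3 + k * iqr]


def random_oversample(samples, labels, seed=0):
    """Duplicate randomly chosen samples of each smaller class until every
    class has as many samples as the largest one."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_x, out_y = list(samples), list(labels)
    for cls, n in counts.items():
        pool = [x for x, y in zip(samples, labels) if y == cls]
        for _ in range(target - n):
            out_x.append(rng.choice(pool))
            out_y.append(cls)
    return out_x, out_y


print(impute_mean([1.0, None, 3.0]))                       # [1.0, 2.0, 3.0]
print(min_max_normalize([2.0, 4.0, 6.0]))                  # [0.0, 0.5, 1.0]
print(remove_outliers_iqr([10, 12, 11, 13, 12, 11, 100]))  # [10, 12, 11, 13, 12, 11]
x_bal, y_bal = random_oversample(["a", "b", "c"], [0, 0, 1])
print(Counter(y_bal))  # each class now has 2 samples
```

Order matters when chaining these steps. If you remove outliers before mean imputation, an extreme value cannot skew the filled-in mean.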

2. Computational Resources

Challenge:

Training deep learning models demands significant computational power, typically high-end GPUs or TPUs that are either owned or rented from a cloud provider, which can be expensive.

Solution:

- Use cloud-based platforms like AWS, Google Cloud, or Microsoft Azure to access scalable computing resources.

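- Optimize code with efficient data loading (for example, prefetching batches and loading data in parallel). Also apply compiler optimizations such as TensorFlow's XLA or PyTorch's JIT compilation (TorchScript, or `torch.compile` in PyTorch 2.x).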

- Consider distributed training across multiple GPUs or machines to reduce training time.
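The idea behind synchronous data-parallel training can be illustrated without any framework. The sketch below assumes a one-variable linear model trained with mean squared error. It simulates the workers one after another in a single process, and `data_parallel_step` and the other names are hypothetical. Each simulated worker computes a gradient on its own equal-sized shard of the batch. Averaging those gradients, the role an all-reduce plays in real frameworks, reproduces the full-batch gradient.

```python
def mse_gradient(w, b, xs, ys):
    """Gradient of mean squared error for the model y = w*x + b on one shard."""
    n = len(xs)
    residuals = [w * x + b - y for x, y in zip(xs, ys)]
    gw = sum(2 * r * x for r, x in zip(residuals, xs)) / n
    gb = sum(2 * r for r in residuals) / n
    return gw, gb


def split_into_shards(items, num_workers):
    """Split a batch into num_workers contiguous shards of equal size."""
    if len(items) % num_workers != 0:
        raise ValueError("batch size must be divisible by num_workers")
    size = len(items) // num_workers
    return [items[i * size:(i + 1) * size] for i in range(num_workers)]


def data_parallel_step(w, b, xs, ys, num_workers, lr=0.1):
    """One synchronous data-parallel gradient step, simulated in one process."""
    x_shards = split_into_shards(xs, num_workers)
    y_shards = split_into_shards(ys, num_workers)
    # Each simulated worker computes a local gradient on its own shard.
    grads = [mse_gradient(w, b, xs_i, ys_i) for xs_i, ys_i in zip(x_shards, y_shards)]
    # Averaging the local gradients stands in for an all-reduce across workers.
    gw = sum(g[0] for g in grads) / num_workers
    gb = sum(g[1] for g in grads) / num_workers
    return w - lr * gw, b - lr * gb


# Example: fit y = 2x + 1 with 2 simulated workers.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
w, b = 0.0, 0.0
for _ in range(1000):
    w, b = data_parallel_step(w, b, xs, ys, num_workers=2)
print(round(w, 4), round(b, 4))  # 2.0 1.0
```

Real frameworks perform the averaging with a collective operation between devices, but the arithmetic is the same. With equal shard sizes, the mean of the per-shard mean gradients equals the full-batch gradient.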

3. Overfitting and Underfitting

Challenge:

Models often suffer from overfitting (memorizing training data but failing on new data) or underfitting (failing to learn patterns effectively).

Solution:

- Use regularization techniques such as dropout, L1/L2 weight penalties, and early stopping to reduce overfitting. Batch normalization can also have a mild regularizing effect.

- Increase the amount of training data or use transfer learning to improve generalization.

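- Address underfitting by increasing model capacity, training for longer, or reducing regularization. Tune hyperparameters to balance model complexity against generalization.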
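Two of the regularizers mentioned above can be written in a few lines. This is a minimal sketch over plain Python lists, not how a framework implements them internally. `inverted_dropout` applies dropout during training only and scales the surviving activations by 1/(1 - p), so their expected value is unchanged at inference time. The L2 helpers show the penalty term and its gradient. All function names are hypothetical.

```python
import random


def inverted_dropout(activations, p, rng):
    """Training-time dropout: zero each activation with probability p and scale
    the survivors by 1 / (1 - p), so inference can use activations unchanged."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    keep = 1.0 - p
    return [a / keep if rng.random() >= p else 0.0 for a in activations]


def l2_penalized_loss(data_loss, weights, lam):
    """Data loss plus the L2 penalty lam * sum(w^2)."""
    return data_loss + lam * sum(w * w for w in weights)


def l2_penalty_gradient(weights, lam):
    """Gradient of the L2 penalty with respect to each weight: 2 * lam * w.
    Adding this to the data-loss gradient shrinks weights toward zero."""
    return [2.0 * lam * w for w in weights]


rng = random.Random(0)
print(inverted_dropout([1.0, 2.0, 3.0, 4.0], p=0.5, rng=rng))  # survivors are doubled
print(l2_penalized_loss(1.0, [1.0, 2.0], lam=0.1))  # 1.5
```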

4. Hyperparameter Tuning

Challenge:

Selecting the right hyperparameters (learning rate, batch size, activation functions, etc.) is crucial for optimal performance but can be time-consuming.

Solution:

- Use automated hyperparameter search strategies such as grid search, random search, or Bayesian optimization.

- Experiment with learning rate schedules and with adaptive optimizers such as Adam or RMSprop, which adjust the effective step size for each parameter.

- Use cross-validation to compare hyperparameter settings across several train/validation splits rather than relying on a single split.
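Grid search combined with k-fold cross-validation can be sketched without any library. The example assumes a toy one-feature ridge regression without an intercept, whose only hyperparameter is the penalty strength `lam`. Each candidate value is scored by its mean validation error across the folds, and the lowest score wins. The names are hypothetical. The folds are contiguous for simplicity, so real data should be shuffled first.

```python
def k_fold_indices(n, k):
    """Yield (train_idx, val_idx) pairs for k contiguous folds covering range(n)."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, val
        start += size


def fit_ridge_1d(xs, ys, lam):
    """Closed-form ridge regression without intercept: w = sum(xy) / (sum(x^2) + lam)."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)


def cv_score(xs, ys, lam, k=5):
    """Mean validation MSE across k folds for one hyperparameter value."""
    scores = []
    for train, val in k_fold_indices(len(xs), k):
        w = fit_ridge_1d([xs[i] for i in train], [ys[i] for i in train], lam)
        scores.append(sum((w * xs[i] - ys[i]) ** 2 for i in val) / len(val))
    return sum(scores) / len(scores)


def grid_search(xs, ys, grid, k=5):
    """Return the grid value with the lowest cross-validated MSE, plus all scores."""
    scores = {lam: cv_score(xs, ys, lam, k) for lam in grid}
    best = min(scores, key=scores.get)
    return best, scores


xs = [float(i) for i in range(1, 11)]
ys = [2.0 * x for x in xs]
best, scores = grid_search(xs, ys, grid=[0.0, 1.0, 10.0], k=5)
print(best)  # 0.0, since the toy data is noise-free
```

Random search follows the same pattern. The only difference is that the candidate values are sampled from a distribution instead of being enumerated from a fixed grid.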

5. Model Interpretability

Challenge:

Complex models such as deep neural networks often behave as black boxes, making it difficult to understand why they produce a particular prediction.

Solution:

- Use explainability tools such as SHAP, LIME, and Grad-CAM (for convolutional image models) to visualize and explain model decisions.

- Prefer interpretable models where possible, such as decision trees and linear models.

- Incorporate model monitoring dashboards to track predictions and anomalies.
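SHAP and LIME come with their own APIs. As a library-free illustration of the same goal, the sketch below uses permutation feature importance, a simpler model-agnostic technique that is neither SHAP nor LIME. It shuffles one feature column at a time and measures how much the model's error rises. Features the model ignores score zero. The sketch assumes the model is any Python callable that maps a feature row (a list) to a number, and all names are hypothetical.

```python
import random


def mse(model, X, y):
    """Mean squared error of a callable model over rows X and targets y."""
    return sum((model(row) - t) ** 2 for row, t in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """For each feature, the average increase in MSE after shuffling that
    feature's column; rows must be lists so they can be sliced and rebuilt."""
    rng = random.Random(seed)
    baseline = mse(model, X, y)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            increases.append(mse(model, X_perm, y) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


def model(row):
    return 3.0 * row[0]  # toy model that ignores the second feature


X = [[float(i), float(i % 3)] for i in range(20)]
y = [model(row) for row in X]
print(permutation_importance(model, X, y))  # second value is 0.0
```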

6. Scalability Issues

Challenge:

Deploying AI models at scale can lead to issues with latency, reliability, and cost.

Solution:

- Use model compression techniques such as quantization and pruning to reduce model size and inference time.

- Package models in containers with Docker and orchestrate them with Kubernetes to build scalable infrastructure.

- Consider serverless computing and edge AI for efficient real-time processing.
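Both compression techniques named above, quantization and pruning, can be demonstrated on a flat list of weights. This is a minimal sketch assuming symmetric per-tensor int8 quantization, with one scale factor for the whole tensor, and unstructured magnitude pruning. Production toolkits offer more elaborate variants such as per-channel scales and structured pruning. The function names here are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: q = round(w / scale),
    with scale = max|w| / 127 so every q falls in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q, scale):
    """Map int8 codes back to approximate float weights."""
    return [v * scale for v in q]


def magnitude_prune(weights, sparsity):
    """Unstructured pruning: zero the fraction `sparsity` of weights with the
    smallest absolute value and keep the rest unchanged."""
    k = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:k])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


weights = [0.5, -1.0, 0.25, 0.0]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
print(q)
print(magnitude_prune([0.1, -2.0, 0.05, 1.5], sparsity=0.5))  # [0.0, -2.0, 0.0, 1.5]
```

Storing `q` as 8-bit integers plus a single float scale uses roughly a quarter of the memory of 32-bit floats. The cost is a rounding error of at most half the scale per weight.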

7. Ethical and Bias Concerns

Challenge:

AI models can inherit biases from training data, leading to unfair or discriminatory outcomes.

Solution:

- Implement fairness-aware machine learning techniques, such as reweighting training examples or adding fairness constraints during training, to mitigate biases.

- Use diverse datasets that represent different demographics and scenarios.

- Conduct regular audits of AI predictions to evaluate and address ethical concerns, using fairness metrics such as demographic parity or equalized odds across groups.
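An audit metric and one fairness-aware mitigation can both be sketched with the standard library. The example assumes binary outcomes (0 or 1) and a single sensitive attribute per sample. `demographic_parity_difference` measures the largest gap in positive-outcome rates between groups. `reweighing_weights` implements reweighing (Kamiran and Calders), which assigns each training sample the weight P(group) * P(label) / P(group, label) so that group and label are independent in the weighted data. Function names are hypothetical; maintained libraries such as Fairlearn and AIF360 cover these metrics and mitigations.

```python
from collections import Counter, defaultdict


def selection_rates(outcomes, groups):
    """Fraction of positive (1) outcomes within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for out, g in zip(outcomes, groups):
        totals[g] += 1
        positives[g] += out
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(outcomes, groups):
    """Largest gap in selection rate between any two groups (0.0 means parity)."""
    rates = selection_rates(outcomes, groups)
    return max(rates.values()) - min(rates.values())


def reweighing_weights(labels, groups):
    """Per-sample weights P(g) * P(y) / P(g, y); under these weights the
    positive-label rate is the same in every group."""
    n = len(labels)
    count_g = Counter(groups)
    count_y = Counter(labels)
    count_gy = Counter(zip(groups, labels))
    return [
        (count_g[g] / n) * (count_y[y] / n) / (count_gy[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]


# The same toy 0/1 list serves as predictions for the audit and as
# training labels for reweighing.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
outcomes = [1, 1, 0, 0, 1, 0, 0, 0]
print(selection_rates(outcomes, groups))                # {'a': 0.5, 'b': 0.25}
print(demographic_parity_difference(outcomes, groups))  # 0.25
weights = reweighing_weights(outcomes, groups)
```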

8. Keeping Up with Rapid Advances

Challenge:

AI is evolving rapidly, making it difficult to stay updated with the latest research, frameworks, and best practices.

Solution:

- Follow AI research papers on platforms like arXiv, Google Scholar, and IEEE Xplore.

- Participate in AI communities, forums, and online courses to stay informed.

- Continuously refine models based on new methodologies and emerging trends.

Conclusion

Training an AI model involves overcoming several challenges, from data quality and computational resources to ethical considerations and scalability. By implementing the right strategies and leveraging available tools, developers can enhance their AI models’ performance, reliability, and fairness. Staying updated with the latest advancements and best practices is crucial for developing robust AI solutions.