Introduction

The world of artificial intelligence has made incredible strides in recent years, especially in the field of natural language processing (NLP). One of the most fascinating applications of NLP is AI text generation, where AI models create coherent, contextually relevant text that mimics human writing. From chatbots to content creation, AI-generated text is now a part of our daily lives. But how does it work? In this article, we’ll break down the mechanics of AI text generation, diving into the neural networks and NLP techniques that power this groundbreaking technology.

Understanding the Basics of NLP

Before we delve into the technicalities of AI text generation, it’s essential to understand Natural Language Processing (NLP). NLP is a branch of artificial intelligence that focuses on enabling machines to understand, interpret, and generate human language. NLP combines linguistics, computer science, and machine learning to convert language into representations that machines can compute with, and to convert model output back into text that people can read.

Key NLP Techniques:

- Tokenization: Breaking down text into smaller units called tokens, such as words, subwords, or characters, which the AI can then process individually.

- Stemming and Lemmatization: Reducing words to their base forms (e.g., “running” becomes “run”), helping the AI understand root meanings. Stemming strips suffixes using simple rules, while lemmatization maps each word to its dictionary form.

- Part-of-Speech Tagging: Identifying grammatical categories like nouns, verbs, and adjectives to analyze sentence structure.

- Named Entity Recognition (NER): Detecting entities like names, locations, and dates, helping AI understand context.
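The first two techniques in the list above can be illustrated with a small sketch. It uses only the Python standard library, and the `tokenize` and `naive_stem` helpers are hypothetical names made up for this example. The suffix rules are deliberately crude and stand in for what a real stemmer or lemmatizer would do.

```python
import re

def tokenize(text):
    """Split text into lowercase word tokens and standalone punctuation marks."""
    return re.findall(r"[a-z0-9]+|[^\sa-z0-9]", text.lower())

def naive_stem(word):
    """Strip a few common English suffixes; a toy stand-in for a real stemmer."""
    for suffix in ("ing", "ed", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            stem = word[: -len(suffix)]
            # Undo consonant doubling, e.g. "runn" -> "run".
            if len(stem) >= 2 and stem[-1] == stem[-2] and stem[-1] not in "aeiouls":
                stem = stem[:-1]
            return stem
    return word

tokens = tokenize("The dogs were running quickly.")
print(tokens)
print([naive_stem(t) for t in tokens])
```

Part-of-speech tagging and named entity recognition rely on trained models, so they are usually handled by an NLP library rather than by hand-written rules like these.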

Neural Networks in Text Generation

The backbone of AI text generation lies in neural networks, particularly deep learning architectures designed to process and generate language. Neural networks are loosely inspired by the way neurons in the brain connect, but in practice they are stacks of simple mathematical units whose connection weights are learned from data. For text generation, these networks use multiple layers to build up a representation of context and predict what text should come next.

Types of Neural Networks Used in NLP:

- Recurrent Neural Networks (RNNs):

  - RNNs are designed to handle sequential data, making them a natural fit for text and language processing. They carry a hidden state from one step to the next, allowing them to use context from earlier words in a sequence.

  - Limitations: The influence of early words fades as it passes through many steps (the vanishing gradient problem), so RNNs tend to lose context over long sequences.

- Long Short-Term Memory Networks (LSTMs):

  - LSTMs are a type of RNN specifically built to handle long-term dependencies. They use gates to decide what information to keep and what to discard, which helps them maintain context over extended sequences and makes them more accurate for complex sentences and paragraphs.

  - Limitations: While more effective than plain RNNs, LSTMs still process tokens one at a time, which makes training on large datasets hard to parallelize, and they can still lose track of very long-range dependencies.

- Transformers:

  - Transformers have revolutionized text generation. Unlike RNNs and LSTMs, which read text one token at a time, transformers use an attention mechanism to process all the words in a sequence in parallel and to weigh how relevant each word is to every other word, which helps them retain context more effectively.

  - Examples of Transformer Models: GPT (Generative Pre-trained Transformer) and BERT (Bidirectional Encoder Representations from Transformers) are both based on the transformer architecture.
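To make the RNN limitation in the list above more concrete, here is a toy sketch of the recurrence an RNN applies at each step. It uses single numbers instead of vectors, and the weights (`w_x`, `w_h`, `b`) are arbitrary assumed values rather than trained ones, so treat it as an illustration of the idea and not as a working language model.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One recurrence step with scalars: h_t = tanh(w_x * x_t + w_h * h_prev + b)."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(inputs, w_x=0.5, w_h=0.8, b=0.0):
    """Feed a sequence through the cell, carrying the hidden state forward."""
    h = 0.0
    states = []
    for x in inputs:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

# Only the first input is non-zero, so every later state shows how much
# of that first input the hidden state still "remembers".
states = run_rnn([1.0, 0.0, 0.0, 0.0, 0.0])
print([round(s, 3) for s in states])
```

With these assumed weights, the hidden state shrinks at every step, which mirrors how a plain RNN gradually loses information about early words.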

How Text Generation Models Work

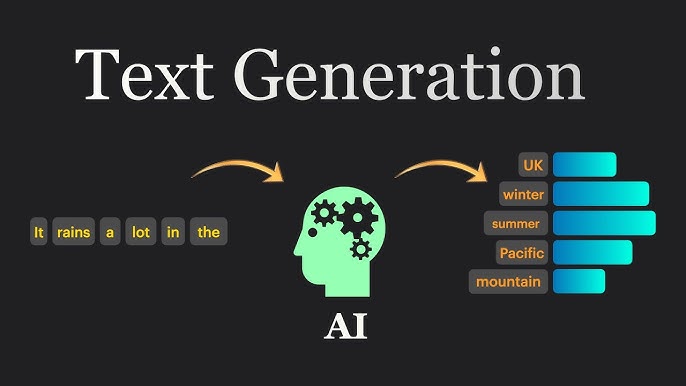

Now that we’ve covered the basics, let’s dive into the text generation process. Modern text generation involves a few critical stages:

Step 1: Preprocessing the Text

The input text goes through several preprocessing steps to convert human language into a format the model can understand.

- Tokenization: Text is broken down into tokens (words or subwords).

- Embedding: Each token is converted into a vector, which is a numerical representation that captures its meaning in relation to other words.
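The two preprocessing steps above can be sketched as follows. The helper names (`build_vocab`, `make_embeddings`) are hypothetical, the whitespace split is a stand-in for a real tokenizer, and the embedding values are random placeholders; in a trained model these vectors are learned so that related words end up close together.

```python
import random

def build_vocab(tokens):
    """Assign each distinct token an integer ID in order of first appearance."""
    vocab = {}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab

def make_embeddings(vocab_size, dim, seed=0):
    """Create a table of random vectors; a trained model would learn these values."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(vocab_size)]

tokens = "the cat sat on the mat".split()
vocab = build_vocab(tokens)
table = make_embeddings(len(vocab), dim=4)

ids = [vocab[t] for t in tokens]     # tokens -> integer IDs
vectors = [table[i] for i in ids]    # integer IDs -> embedding vectors
print(ids)
```

Both occurrences of "the" map to the same ID and therefore to the same vector, which is why the later encoding stage is needed to tell apart the same word used in different contexts.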

Step 2: Encoding the Text

Using a neural network (typically a transformer), the AI encodes the text into context-aware representations. Encoding captures the relationships between words, which helps the model pick up on cues for tone, emotion, and implied meaning, although subtle cases such as sarcasm can still be misread.
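One way a transformer relates each word to every other word is scaled dot-product attention. The sketch below is a simplified version under stated assumptions: it uses the input vectors directly as queries, keys, and values, whereas real transformers first pass them through learned projection matrices and run several attention heads in parallel. The function names and example vectors are illustrative.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def self_attention(vectors):
    """Scaled dot-product attention using the inputs as queries, keys, and values."""
    scale = math.sqrt(len(vectors[0]))
    outputs, weights = [], []
    for query in vectors:
        row = softmax([dot(query, key) / scale for key in vectors])
        weights.append(row)
        # Each output is a weighted average of all value vectors.
        outputs.append([
            sum(w * value[d] for w, value in zip(row, vectors))
            for d in range(len(query))
        ])
    return outputs, weights

embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(embeddings)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row shows how strongly one token attends to every token in the sequence. Tokens with similar vectors receive higher weights, which is how context from related words gets mixed into each token's representation.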

Step 3: Decoding and Text Generation

After encoding, the AI model generates new text by predicting the next token (a word or subword) based on the prompt and everything it has generated so far. It repeats this step, appending one token at a time, until it produces an end-of-sequence marker or reaches a length limit.
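The iterative loop described above can be sketched with a toy stand-in for the model. The `NEXT_TOKEN_PROBS` table is entirely hypothetical and only looks at the previous token, whereas a real model conditions on the whole sequence so far. The loop always picks the single most likely token, a strategy known as greedy decoding.

```python
# Hypothetical next-token probabilities standing in for a trained model.
NEXT_TOKEN_PROBS = {
    "<start>": {"the": 0.9, "a": 0.1},
    "the": {"cat": 0.6, "dog": 0.4},
    "a": {"cat": 0.5, "dog": 0.5},
    "cat": {"sat": 0.7, "<end>": 0.3},
    "dog": {"sat": 0.8, "<end>": 0.2},
    "sat": {"<end>": 1.0},
}

def generate_greedy(max_tokens=10):
    """Repeatedly append the most likely next token until <end> or the length cap."""
    tokens = ["<start>"]
    while len(tokens) - 1 < max_tokens:
        probs = NEXT_TOKEN_PROBS[tokens[-1]]
        next_token = max(probs, key=probs.get)
        if next_token == "<end>":
            break
        tokens.append(next_token)
    return tokens[1:]  # drop the <start> marker

print(" ".join(generate_greedy()))  # prints "the cat sat"
```

Because greedy decoding always takes the top choice, it produces the same text every time for a given prompt.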

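Temperature and Top-k Sampling: During this stage, parameters like temperature and top-k control how random the output is. Temperature rescales the model’s probabilities before a token is sampled: a high temperature flattens them and creates more diverse outputs, while a low temperature concentrates them on the likeliest tokens and produces more predictable text. Top-k sampling limits the model’s choices to the k most likely tokens, which filters out unlikely continuations and tends to improve coherence.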
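Here is a minimal sketch of how these two parameters might be combined when sampling a single token. The `sample_next` function and the example scores are illustrative assumptions; production libraries implement the same idea over tensors covering a full vocabulary.

```python
import math
import random

def sample_next(logits, temperature=1.0, top_k=None, rng=random):
    """Sample a token from raw scores after top-k filtering and temperature scaling."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    if top_k is not None and top_k < 1:
        raise ValueError("top_k must be at least 1")
    # Keep only the k highest-scoring candidates, if requested.
    candidates = sorted(logits.items(), key=lambda kv: kv[1], reverse=True)
    if top_k is not None:
        candidates = candidates[:top_k]
    # Dividing by the temperature sharpens (<1) or flattens (>1) the distribution.
    scaled = [score / temperature for _, score in candidates]
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]  # unnormalized softmax
    tokens = [tok for tok, _ in candidates]
    return rng.choices(tokens, weights=weights, k=1)[0]

logits = {"cat": 2.0, "dog": 1.5, "car": 0.5, "sky": -1.0}
rng = random.Random(0)
print([sample_next(logits, temperature=0.2, rng=rng) for _ in range(5)])
print([sample_next(logits, temperature=2.0, top_k=3, rng=rng) for _ in range(5)])
```

With `top_k=3`, the lowest-scoring token ("sky") can never be selected, no matter how high the temperature is set.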

Popular Text Generation Models

Several well-known models have emerged for text generation, each with unique strengths and applications. Let’s look at a few of the most popular ones:

- GPT-3 (Generative Pre-trained Transformer 3):

  - Developed by OpenAI and released in 2020, GPT-3 was one of the most advanced text generation models of its time, capable of producing highly coherent and contextually relevant text from simple prompts.

  - Applications: Chatbots, content creation, Q&A systems.

- BERT (Bidirectional Encoder Representations from Transformers):

  - Developed by Google, BERT is a transformer-based model designed for language understanding tasks such as classification and question answering. It is an encoder-only model and is not inherently generative, but its pretraining approach influenced many later language models.

  - Applications: Text classification, sentiment analysis, question answering.

- T5 (Text-To-Text Transfer Transformer):

  - Created by Google, T5 reframes various NLP tasks (translation, summarization, etc.) as text generation tasks. Its flexibility and performance make it a powerful option for complex text generation.

  - Applications: Summarization, paraphrasing, and more.

Challenges and Limitations

While AI text generation has made significant advancements, it’s not without challenges:

- Lack of Real-World Understanding: Despite its impressive results, AI text generation lacks genuine comprehension. Models generate text based on statistical patterns in their training data, not verified knowledge, which can lead to confident but inaccurate statements or strange responses.

- Bias in Generated Text: Since AI models are trained on large datasets sourced from the internet, they may inadvertently learn and replicate biases present in the data.

- Over-Reliance on Prompts: AI text generation is prompt-dependent. Small changes in wording can drastically alter output quality, requiring trial and error to produce high-quality results.

- Resource Intensive: Large models like GPT-3 require significant computational resources, which can limit accessibility for smaller businesses or individuals.
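To give a rough sense of scale for the last point, the sketch below estimates the memory needed just to hold a model’s weights. The 175 billion figure is the parameter count publicly reported for the largest GPT-3 model and is not taken from this article. The function name and byte sizes are illustrative assumptions, and real deployments need extra memory for activations and other overhead.

```python
def weight_memory_gb(num_parameters, bytes_per_parameter):
    """Memory needed just to store the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9

# Parameter count reported for the largest GPT-3 model (assumption from outside this article).
GPT3_PARAMETERS = 175_000_000_000

print(weight_memory_gb(GPT3_PARAMETERS, 2))  # 16-bit weights -> 350.0
print(weight_memory_gb(GPT3_PARAMETERS, 4))  # 32-bit weights -> 700.0
```

Even at 16-bit precision, the weights alone are far larger than the memory of a typical consumer GPU, which is why models of this size are usually served from multi-GPU machines or accessed through hosted APIs.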

Conclusion

AI text generation combines the power of neural networks and NLP to create coherent, contextually relevant language. With generative models like GPT-3 and T5, machines have become capable of producing text that closely mimics human writing. Despite current limitations, the potential applications for AI text generation in content creation, customer support, marketing, and education are vast and continually expanding. As technology advances, we can look forward to even more sophisticated and accessible tools that bring AI-generated text into our everyday lives.