Whether you’re just stepping into the world of artificial intelligence or scaling up your deep learning experiments, choosing the right GPU can make all the difference. NVIDIA has long been the go-to brand for AI development thanks to its CUDA platform, Tensor Cores, and robust developer support.

Here’s our curated list of the Top 10 NVIDIA GPUs ideal for learners, developers, and enthusiasts building the future with AI.

🥇 1. NVIDIA RTX 4090

- VRAM: 24 GB GDDR6X
- Architecture: Ada Lovelace
- Why It Rocks: The ultimate powerhouse for single-GPU AI workloads, with room for large models, blazing-fast training, and real-time inference.

🥈 2. NVIDIA RTX A6000

- VRAM: 48 GB GDDR6 ECC
- Architecture: Ampere
- Why It Rocks: Designed for enterprise-grade AI training, LLM fine-tuning, and massive simulation workloads.

🥉 3. NVIDIA RTX 4080

- VRAM: 16 GB GDDR6X
- Architecture: Ada Lovelace
- Why It Rocks: Perfect for deep learning, computer vision, and image generation models like Stable Diffusion.

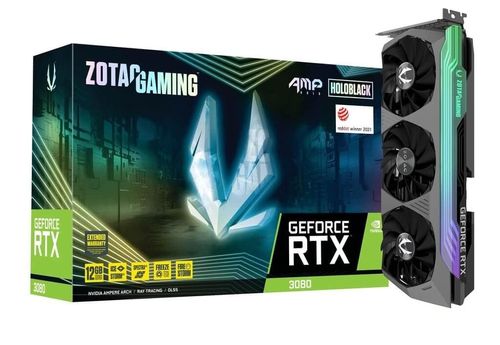

4. NVIDIA RTX 3090

- VRAM: 24 GB GDDR6X
- Architecture: Ampere
- Why It Rocks: Excellent for high-end AI development and still very relevant, with broad software support.

5. NVIDIA RTX 4070 Ti Super

- VRAM: 16 GB GDDR6X
- Architecture: Ada Lovelace
- Why It Rocks: Great balance of efficiency, power, and affordability for developers scaling up.

6. NVIDIA RTX 3080 Ti

- VRAM: 12 GB GDDR6X
- Architecture: Ampere
- Why It Rocks: A solid GPU for demanding models, offering stable training performance within the limits of its 12 GB of VRAM.

7. NVIDIA RTX 3060 (12GB)

- VRAM: 12 GB GDDR6
- Architecture: Ampere
- Why It Rocks: Ideal for entry-level model training and AI education, with generous VRAM for its price.

8. NVIDIA RTX 3070 Ti

- VRAM: 8 GB GDDR6X
- Architecture: Ampere
- Why It Rocks: A solid performer for intermediate-level AI development. Its 8 GB of VRAM is limiting, but it offers more CUDA cores and higher memory bandwidth than the RTX 3060.

9. NVIDIA RTX 4060 Ti (16GB)

- VRAM: 16 GB GDDR6
- Architecture: Ada Lovelace
- Why It Rocks: Perfect for learners who want future-proof memory in a mid-range card.

10. NVIDIA GTX 1660 Super

- VRAM: 6 GB GDDR6
- Architecture: Turing (no Tensor Cores)
- Why It Rocks: Great for absolute beginners experimenting with basic models and frameworks.
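To compare the ten cards against the criteria in the next section, their specs (exactly as listed above) can be encoded in a small table and filtered. This is a minimal Python sketch; the `GPU` class and `shortlist` helper are hypothetical names for illustration, and the Tensor Core flag reflects that every RTX card in this list has Tensor Cores while the GTX 1660 Super does not.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class GPU:
    name: str
    vram_gb: int
    memory_type: str
    architecture: str
    tensor_cores: bool


# Specs as listed in this article, in ranking order.
GPUS = [
    GPU("RTX 4090", 24, "GDDR6X", "Ada Lovelace", True),
    GPU("RTX A6000", 48, "GDDR6 ECC", "Ampere", True),
    GPU("RTX 4080", 16, "GDDR6X", "Ada Lovelace", True),
    GPU("RTX 3090", 24, "GDDR6X", "Ampere", True),
    GPU("RTX 4070 Ti Super", 16, "GDDR6X", "Ada Lovelace", True),
    GPU("RTX 3080 Ti", 12, "GDDR6X", "Ampere", True),
    GPU("RTX 3060 (12GB)", 12, "GDDR6", "Ampere", True),
    GPU("RTX 3070 Ti", 8, "GDDR6X", "Ampere", True),
    GPU("RTX 4060 Ti (16GB)", 16, "GDDR6", "Ada Lovelace", True),
    GPU("GTX 1660 Super", 6, "GDDR6", "Turing", False),  # no Tensor Cores
]


def shortlist(min_vram_gb: int = 0, need_tensor_cores: bool = False) -> List[str]:
    """Return names of GPUs meeting the VRAM and Tensor Core requirements, in ranking order."""
    return [
        g.name
        for g in GPUS
        if g.vram_gb >= min_vram_gb and (g.tensor_cores or not need_tensor_cores)
    ]


if __name__ == "__main__":
    print(shortlist(min_vram_gb=16))
```

For example, `shortlist(min_vram_gb=16)` keeps the RTX 4090, RTX A6000, RTX 4080, RTX 3090, RTX 4070 Ti Super, and RTX 4060 Ti (16GB).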

🧠 What to Look for in a GPU for AI

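- CUDA & Tensor Cores: CUDA cores handle general parallel computation, while Tensor Cores accelerate the mixed-precision matrix math at the heart of deep learning.
- VRAM: At least 12 GB is recommended for meaningful model training, because the model's weights, gradients, optimizer state, and activations all have to fit in GPU memory.
- Software Compatibility: Ensure support for CUDA, cuDNN, and AI frameworks such as TensorFlow and PyTorch.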
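Before training anything, it is worth confirming that your framework actually sees the GPU. This sketch assumes PyTorch (TensorFlow has its own equivalents) and uses only standard `torch.cuda` and `torch.backends.cudnn` calls; `describe_cuda_setup` is a hypothetical helper name. It degrades gracefully if PyTorch is not installed or no GPU is found.

```python
def describe_cuda_setup() -> dict:
    """Report whether PyTorch can see a CUDA GPU and which CUDA/cuDNN builds it uses."""
    try:
        import torch
    except ImportError:
        return {"torch_installed": False}

    cudnn_ok = torch.backends.cudnn.is_available()
    info = {
        "torch_installed": True,
        "torch_version": torch.__version__,
        "cuda_build": torch.version.cuda,  # None for CPU-only PyTorch builds
        "cuda_available": torch.cuda.is_available(),
        "cudnn_version": torch.backends.cudnn.version() if cudnn_ok else None,
    }
    if info["cuda_available"]:
        props = torch.cuda.get_device_properties(0)  # first visible GPU
        info["device_name"] = props.name
        info["vram_gib"] = round(props.total_memory / 1024**3, 1)
        # Compute capability alone does not prove Tensor Cores exist:
        # GTX 16-series cards such as the 1660 Super report 7.5 but have none.
        info["compute_capability"] = f"{props.major}.{props.minor}"
    return info


if __name__ == "__main__":
    for key, value in describe_cuda_setup().items():
        print(f"{key}: {value}")
```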

🧰 Budget-Based GPU Picks for AI Developers

| Budget Range | Recommended GPU |
|---|---|
| ₹30K – ₹50K (~$350–$600) | RTX 3060 (12GB) |
| ₹60K – ₹90K (~$700–$1,100) | RTX 4070 / 4070 Ti |
| ₹1.2L+ (~$1,300+) | RTX 4090 |

🎯 Final Recommendation: Go All-In with the RTX 4090

If you’re an AI learner evolving into an enthusiast or pro-level developer, the NVIDIA RTX 4090 is hands-down the most powerful and future-proof consumer card on this list.

With its massive 24 GB of GDDR6X VRAM, cutting-edge Ada Lovelace architecture, and blazing-fast Tensor and CUDA core performance, it handles a wide range of workloads: fine-tuning smaller LLMs (typically with quantization or parameter-efficient methods), real-time image generation, reinforcement learning, and multimodal AI.
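A back-of-the-envelope calculation shows why 24 GB matters. The figures below are common rules of thumb (bytes per parameter for the weights, plus gradients and Adam optimizer state for full mixed-precision training); they ignore activations, the KV cache, and framework overhead, so treat the results as lower bounds. The helper names are hypothetical, and GB here means 10^9 bytes for simplicity.

```python
# Rough bytes-per-parameter rules of thumb (lower bounds: they exclude
# activations, KV cache, CUDA context and framework overhead).
BYTES_PER_PARAM = {
    "fp32_inference": 4.0,
    "fp16_inference": 2.0,
    "int8_inference": 1.0,
    "int4_inference": 0.5,
    # fp16 weights (2) + fp16 grads (2) + fp32 master weights (4) + Adam moments (8)
    "full_finetune_adam_mixed": 16.0,
}


def estimate_vram_gb(num_params: float, mode: str) -> float:
    """Estimate GB (1e9 bytes) needed just to hold the model state in the given mode."""
    return num_params * BYTES_PER_PARAM[mode] / 1e9


def fits(num_params: float, mode: str, vram_gb: float) -> bool:
    """True if the lower-bound estimate fits within the card's VRAM."""
    return estimate_vram_gb(num_params, mode) <= vram_gb


if __name__ == "__main__":
    for mode in BYTES_PER_PARAM:
        need = estimate_vram_gb(7e9, mode)
        print(f"7B params, {mode}: ~{need:.1f} GB, fits in 24 GB: {fits(7e9, mode, 24)}")
```

For a 7B-parameter model, fp16 weights alone need about 14 GB, which fits on a 24 GB card but not a 12 GB one, whereas full fine-tuning with Adam needs roughly 112 GB. That gap is why fine-tuning LLMs on a single RTX 4090 usually relies on quantization or parameter-efficient methods such as LoRA.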

Whether you’re:

- Training or fine-tuning Stable Diffusion models
- Working on AI-powered SaaS tools
- Experimenting with generative AI apps
- Building your own small-scale LLM

👉 The RTX 4090 won’t just support you — it will supercharge you.